Optimizing Delta Lake Tables: Liquid Clustering vs. Partitioning with Z-Order

This guide will help you decide on your clustering methodology in Lakehouse

Optimizing query performance is crucial in the realm of big data. Delta Lake, a popular open-source storage layer, offers two primary methods for organizing data: liquid clustering and partitioned Z-order tables. This blog post will guide you through the decision-making process of choosing between these two approaches.

Traditional relational databases use indexing to speed up data retrieval. However, in data warehouses and lakehouses storing massive amounts of data, separate index structures become impractical due to storage overhead. Instead, these systems use clustering to sort table data physically, achieving similar performance benefits without additional storage costs.

Clustering in Delta Lake serves a similar purpose to indexing in relational databases. It enhances query performance by organizing data based on frequently accessed columns. The critical distinction is that clustering physically sorts the data within the table rather than creating separate index structures.

Understanding Liquid Clustering and Partitioned Z-Order Tables

Liquid Clustering

Liquid clustering is a newer algorithm for Delta Lake tables that offers several advantages:

Ability to change clustering columns at any time, which means data engineering does not need to decide what the query patterns would look like up

Optimized for unpartitioned tables

Doesn't re-cluster previously clustered files

Liquid clustering relies on optimistic concurrency control (OCC) to handle conflicts when multiple writes write to the same table.

Partitioned Z-Order Tables

Partitioning combined with Z-ordering is a more traditional approach that:

Allows for greater control over data organization

Supports parallel writes more effectively

Enables fine-grained optimization of specific partitions

However, data engineering must be aware of querying patterns up front to choose a partition column.

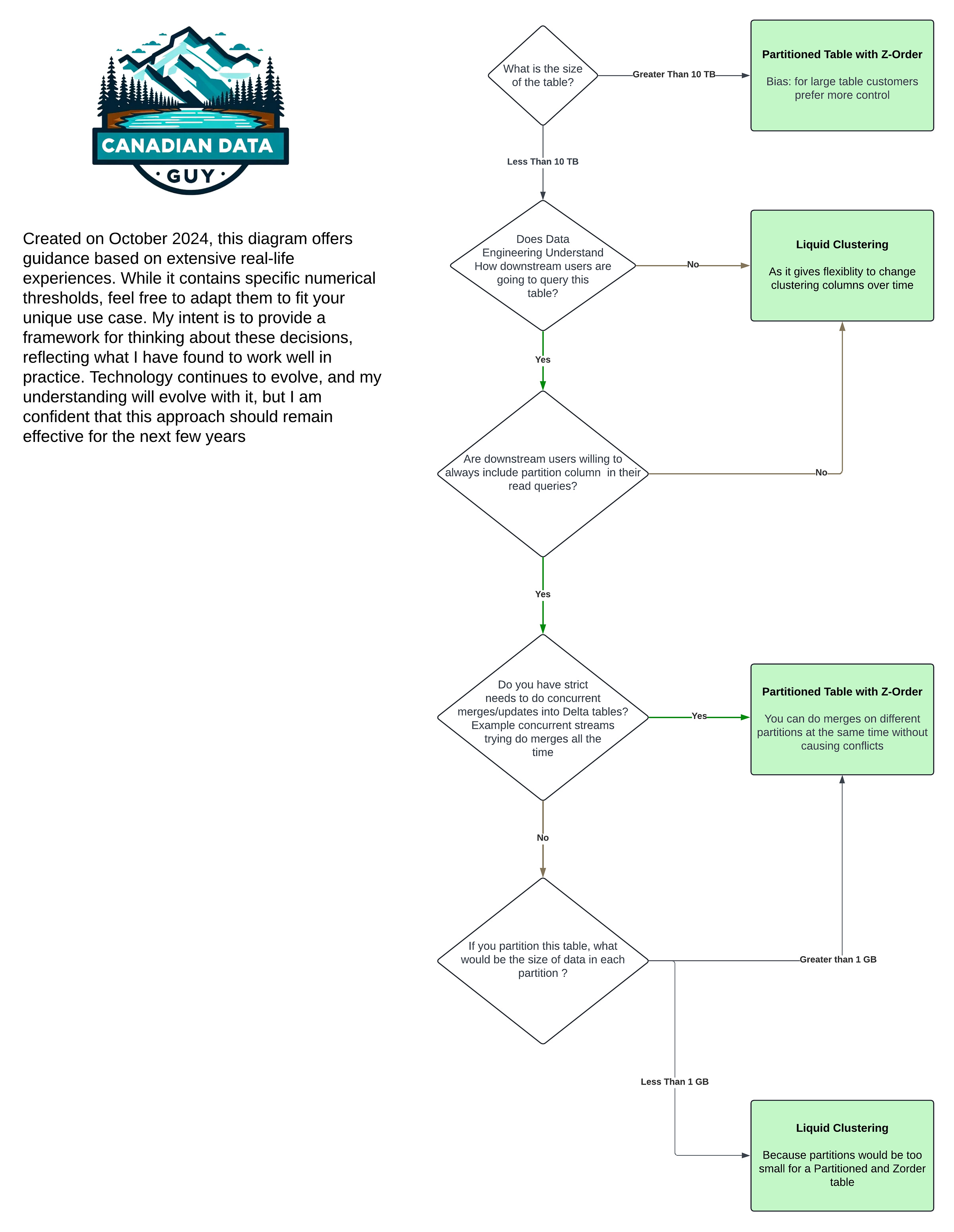

Decision Tree

Factors to Consider When Choosing

Table Size

Small tables (< 10 GB): Liquid clustering is generally sufficient and recommended.

Medium tables (10 GB–10 TB): Either approach can work, depending on other factors.

Large tables (> 10 TB): Partitioning with Z-ordering is often preferred for more control.

Concurrent Write Requirements

Partitioning is recommended for tables requiring multiple processes to write simultaneously, especially for update operations. It allows better management of concurrent writes and updates. Appends are less concerning, as they don't create conflicts when multiple writers access the same table.

Query Patterns

If users consistently include the partition column in their queries, partitioning can be very effective.

Liquid clustering may be more suitable for more flexible query patterns where users may not always include the partition column.

Data Distribution

Date-based data (e.g., clickstream data) often benefits from partitioning.

For data without a clear partitioning strategy, liquid clustering may be better.

Partition Cardinality

Aim for fewer than 10,000 distinct partition values.

Each partition should contain at least 1 GB of data.

Real-World Example: Amazon Clickstream Data

Let's consider a real-world scenario using Amazon's clickstream data:

The table stores 3 years of data for 10 countries

Partitioning by click date results in approximately 1,000 partitions (365 * 3)

10 countries * 1,000 date partitions = 10,000 total partitions

This setup is within the recommended partition count (< 10,000) and provides good control over the data. Here's how we might structure this table:

Partition by

click_date, countryZ-order by

merchant_id, andadvertiser_id

Optimizing the Partitioned Table

To maintain optimal performance, you can run a daily optimization job on the newest partition:

OPTIMIZE table_name

WHERE click_date = 'ANY_DATE' and country = 'CANADA'

ZORDER BY ( merchant_id, advertiser_id)

This approach ensures good performance for date-range queries and lookups on Z-ordered columns.

Parallel Write Considerations

Partitioning becomes crucial when you need parallel writes, such as processing data from multiple countries simultaneously. For example:

US data coming from a US Kafka topic

Canada data coming from a Canada Kafka topic

Both streams need to write to the same table in parallel and in real-time. Partitioning allows these writes to occur without conflicts, especially for append operations.

Optimistic Concurrency Control

Delta Lake uses optimistic concurrency control to manage parallel writes. Here's how it works:

Writers check the current version of the Delta table (e.g., version 100).

They attempt to write a new JSON file (e.g., 101.json).

Only one writer can succeed in creating this file.

The "losing" writer checks if there are conflicts with what was previously written.

If no conflicts, it creates the next version (e.g., 102.json).

This approach works well for appends but can be challenging for updates, especially when multiple writers are trying to modify the same files.

Partition Column Selection

When choosing a partition column:

Select immutable columns (e.g., click date, sale date)

Avoid high-cardinality columns like timestamps

For timestamp data, create a derived date column for partitioning

Potential Pitfalls and Best Practices

Here are some key considerations and common mistakes to avoid:

Over-Partitioning: A common mistake is creating too many partitions. While partitioning helps with performance, too many partitions can result in overhead. A good rule of thumb is to keep partition counts under 10,000. For example, if you're storing three years of daily click data, partitioning by

click_datewould result in around 1,000 partitions for three years—well within the 10,000-partition guideline.Partitioning on High Cardinality Columns: Avoid partitioning on high cardinality columns (e.g., timestamps). This would result in too many partitions, leading to performance degradation. Instead, partition on a date column and ensure it has enough data per partition.

Partition Size: To avoid the performance penalties associated with small partitions, each partition should hold at least 1 GB of data.

Schedule Optimization: Regularly run the OPTIMIZE command.

For partitioned tables, ensure new partitions are clustered and Z-ordered for efficient querying. Including the WHERE clause is important because the Zorder algorithm has a limitation: it can cluster files that were already clustered.

For Liquid Clustered tables, life is simple, run optimize at a cadence it will not recluster files it has clustered before.

Conclusion

Choosing between liquid clustering and partitioned Z-order tables depends on various factors including table size, write patterns, and query requirements. For smaller tables or those without clear partitioning strategies, liquid clustering offers simplicity and efficiency. Larger tables or those with concurrent write needs often benefit from partitioning with Z-ordering.

Liquid Clustering is the right choice if:

Your table is small to medium-sized (less than 10 TB).

You have fewer concurrent writers or need a simpler approach.

Users don't consistently query with a partition column.

Partitioning with Z-Order is the better option if:

Your table is ultra-large (more than 10 TB).

You have multiple parallel writers or complex writing requirements.

Users frequently query with the partition column.

Always consider your specific use case and be prepared to test both approaches to determine the best fit for your data and query patterns. The right choice will significantly impact your query performance and overall data management efficiency.

Disclaimer: The views expressed in this blog are my own and do not represent official guidance from Databricks.

References

https://www.databricks.com/blog/2018/07/31/processing-petabytes-of-data-in-seconds-with-databricks-delta.html

https://docs.databricks.com/en/delta/clustering.html

https://www.databricks.com/blog/announcing-general-availability-liquid-clustering